Toxicity - a Hugging Face Space by evaluate-measurement

By a mysterious writer

Description

The toxicity measurement aims to quantify the toxicity of the input texts using a pretrained hate speech classification model.
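The measurement can be sketched in a few lines. In the Hugging Face `evaluate` library it is loaded with `evaluate.load("toxicity", module_type="measurement")` and run through its `compute` method. As we understand it, that method returns one toxicity score per text. It can also return an aggregate: the maximum score, or the fraction of texts that score at or above 0.5.

The sketch below mirrors that shape under some stated assumptions. It is not the library's implementation:

* It does not load a pretrained hate speech model. It accepts any scoring function.
* For the demo it uses a toy keyword scorer.
* The names `measure_toxicity`, `toy_scorer` and `TOY_LEXICON` are hypothetical.
* The 0.5 threshold is an assumption.

```python
import re
from typing import Callable, Dict, List, Optional, Sequence

# Hypothetical scorer type: maps a text to a probability that it is toxic.
# In the real measurement this role is played by a pretrained hate speech classifier.
ToxicityScorer = Callable[[str], float]

# Hypothetical toy lexicon used only to make this sketch runnable offline.
TOY_LEXICON = {"hate", "stupid", "idiot"}


def toy_scorer(text: str) -> float:
    """Toy stand-in for a classifier: high score if any lexicon word appears."""
    words = re.findall(r"[a-z']+", text.lower())
    return 0.9 if any(w in TOY_LEXICON for w in words) else 0.1


def measure_toxicity(
    texts: Sequence[str],
    scorer: ToxicityScorer,
    aggregation: Optional[str] = None,
    threshold: float = 0.5,  # assumed cut-off for counting a text as toxic
) -> Dict[str, object]:
    """Score each text, then optionally aggregate the per-text scores."""
    if not texts:
        raise ValueError("texts must be non-empty")
    scores: List[float] = [scorer(t) for t in texts]
    if aggregation is None:
        # One score per input text.
        return {"toxicity": scores}
    if aggregation == "maximum":
        # Worst-case toxicity over the batch.
        return {"max_toxicity": max(scores)}
    if aggregation == "ratio":
        # Fraction of texts whose score reaches the threshold.
        return {"toxicity_ratio": sum(s >= threshold for s in scores) / len(scores)}
    raise ValueError(f"unknown aggregation: {aggregation!r}")


if __name__ == "__main__":
    texts = ["have a nice day", "you are stupid", "I hate you"]
    print(measure_toxicity(texts, toy_scorer))
    print(measure_toxicity(texts, toy_scorer, aggregation="maximum"))
    print(measure_toxicity(texts, toy_scorer, aggregation="ratio"))
```

Passing the scorer in as a function keeps the aggregation logic separate from model loading. To use a real model, swap `toy_scorer` for a wrapper around a text-classification model that returns the probability of the toxic label.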

Businesses tackle practical realities of hybrid models

Combined metrics ignore some arguments · Issue #423 · huggingface/evaluate · GitHub

Orca: Progressive Learning from Complex Explanation Traces of GPT-4 Paper Reading - Arize AI

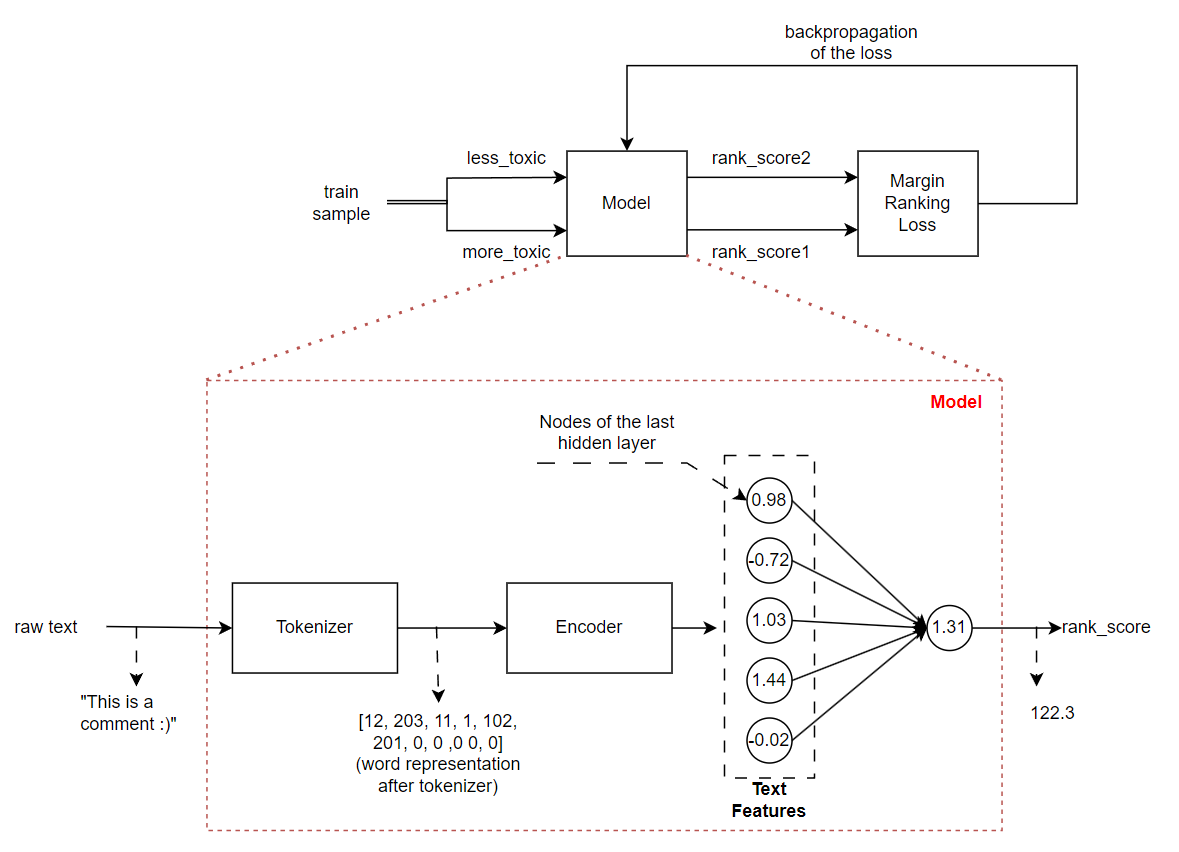

Building a Comment Toxicity Ranker Using Hugging Face's Transformer Models, by Jacky Kaub

Hugging Face Fights Biases with New Metrics

Holistic Evaluation of Language Models - Bommasani - 2023 - Annals of the New York Academy of Sciences - Wiley Online Library

FMOps/LLMOps: Operationalize generative AI and differences with MLOps

Pre-Trained Language Models and Their Applications - ScienceDirect

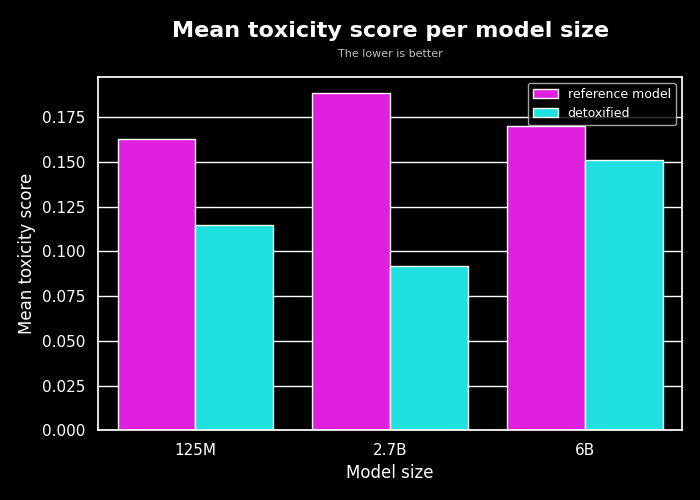

Detoxifying a Language Model using PPO

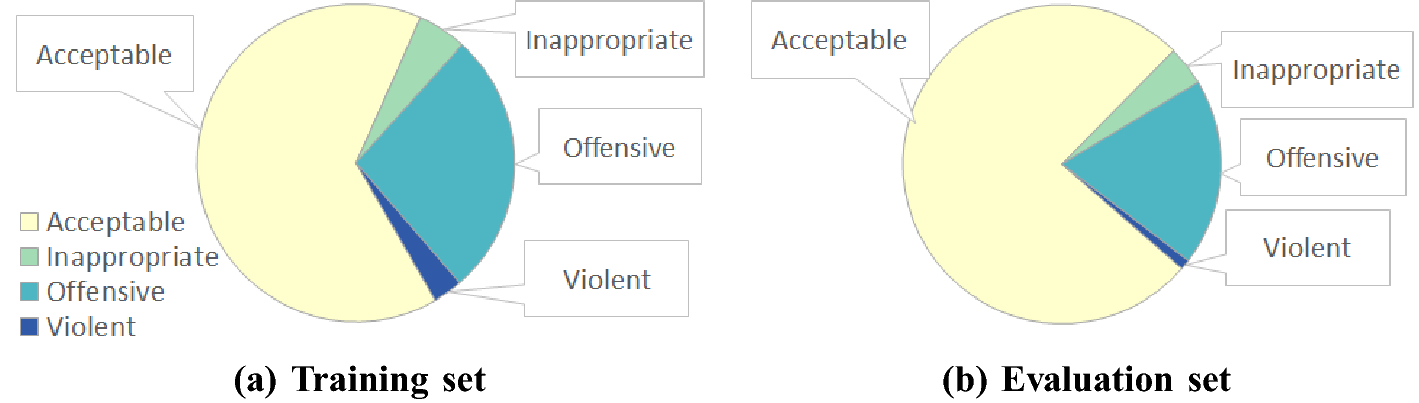

Jigsaw Unintended Bias in Toxicity Classification, by Pulkit Ratna Ganjeer

Human Evaluation of Large Language Models: How Good is Hugging Face's BLOOM?

Text generation with GPT-2 - Model Differently

Dynamics of online hate and misinformation

ChemGPT outputs are controllable via sampling strategy and prompt