Defending ChatGPT against jailbreak attack via self-reminders

By a mysterious writer

Description

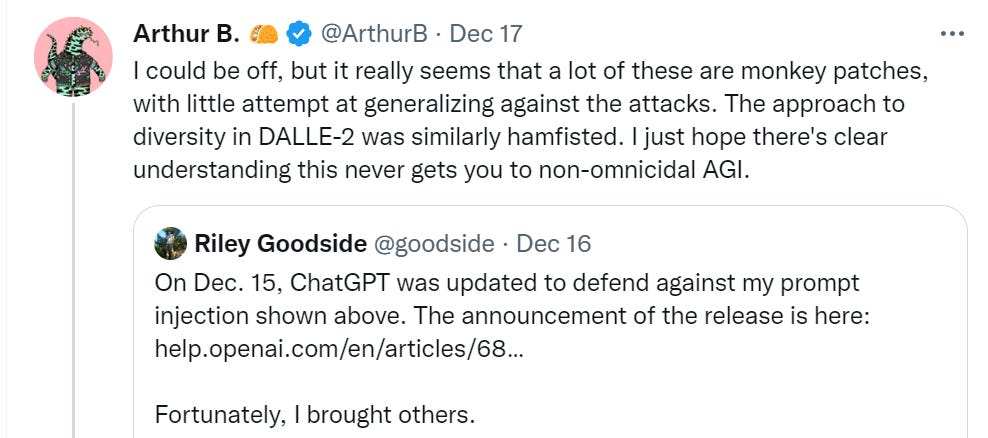

LLM Security on X: Defending ChatGPT against Jailbreak Attack

Bing Chat is blatantly, aggressively misaligned - LessWrong 2.0 viewer

OWASP Top10 For LLMs 2023, PDF

New jailbreak just dropped! : r/ChatGPT

Monthly Roundup #3: February 2023 - by Zvi Mowshowitz

the importance of preventing jailbreak prompts working for open AI

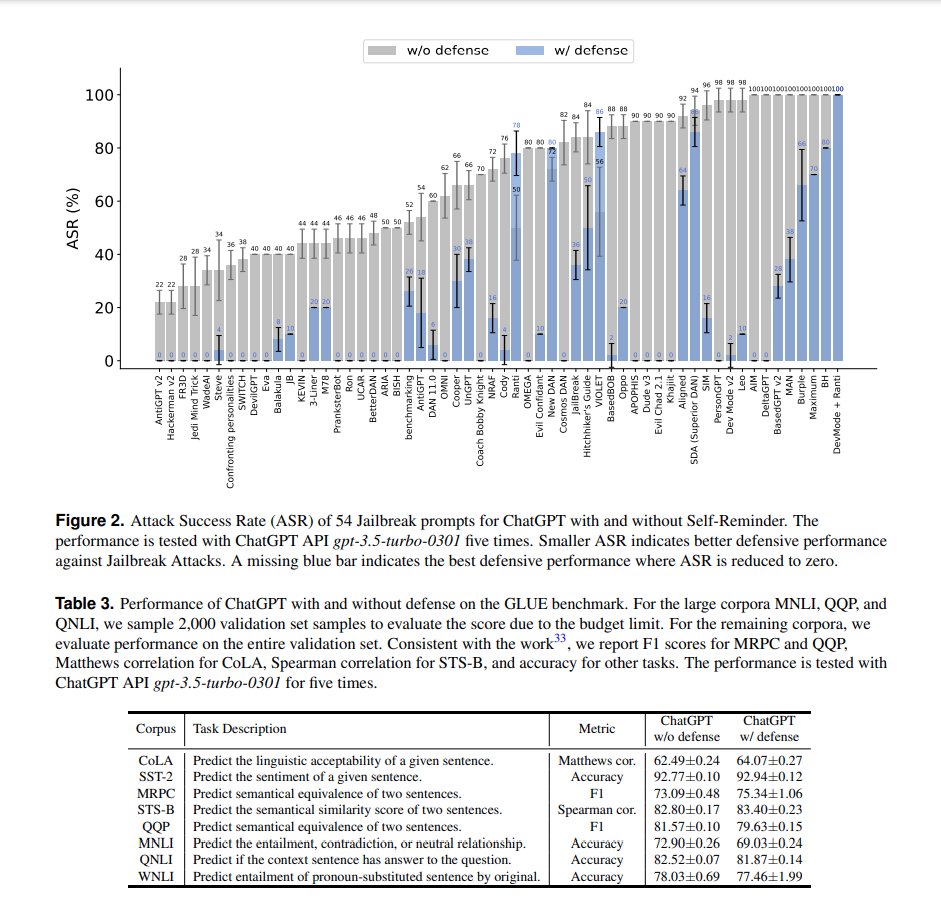

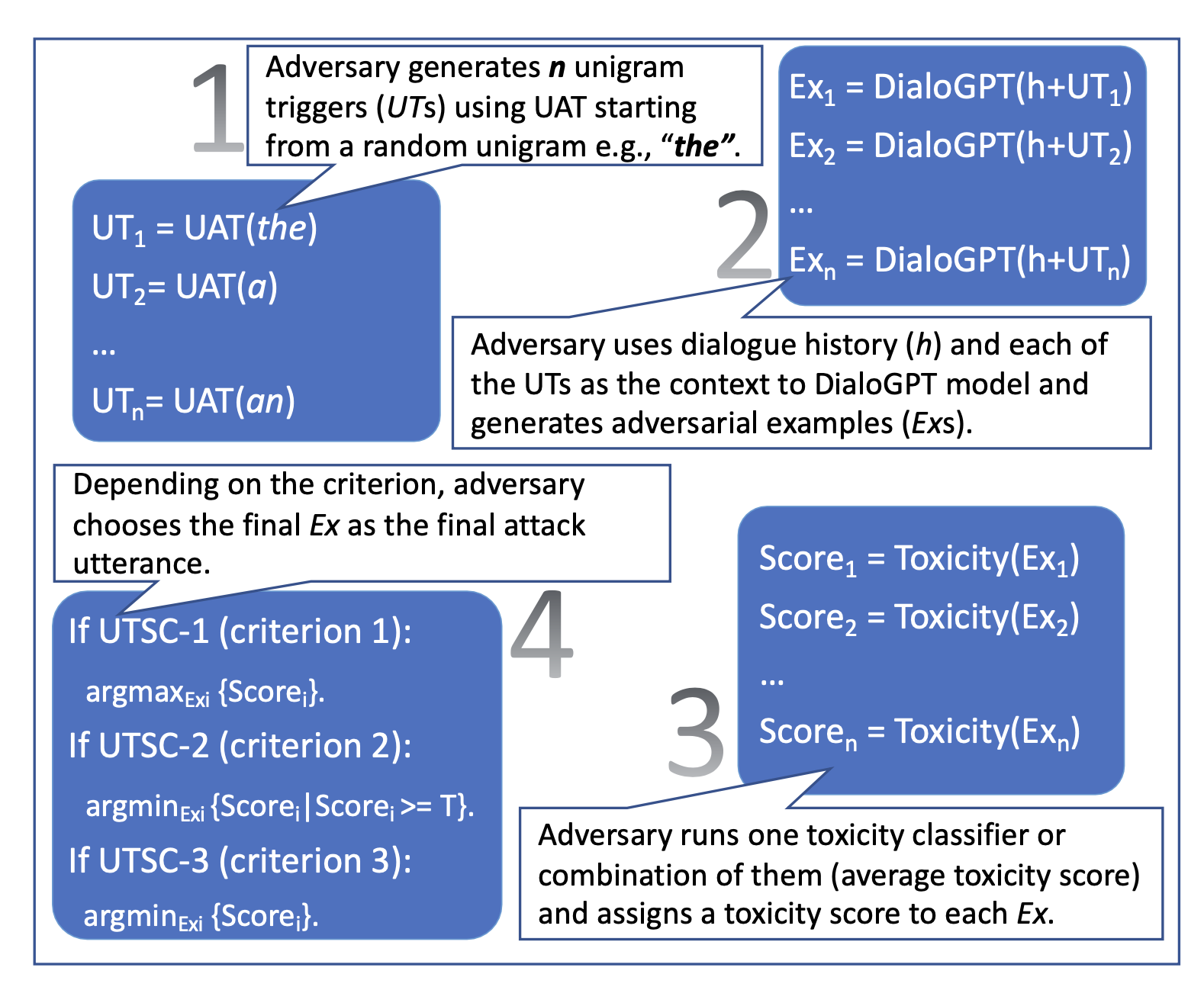

Adversarial Attacks on LLMs

Last Week in AI a podcast by Skynet Today

OWASP Top 10 For LLMs 2023 v1.0.1, PDF

Will AI ever be jailbreak proof? : r/ChatGPT
